I’ve been watching a ton of playoff basketball lately, and amid the relentless torrent of Caitlin Clark State Farm ads and Wingstop spots (no flex, zone!), there’s a Google Pixel 8 phone commercial in particular that never fails to bum me out.

The spot emphasizes the phone’s AI-powered editing tool that lets users manipulate pictures in all sorts of convincing ways. You can adjust still shots to make it look like you jumped higher, like you didn’t actually blink when the picture was taken, or like the sky was actually beautiful that day. There’s one specific moment in the ad where a dad is photographed tossing his kid up in the air, and the picture is further manipulated to look like he flung the kid higher than he actually did. These are all small things, sure, but part of the bummer is how all these adjustments reflect a particular fixation on wanting to memorialize things as better than they actually were. These tools help the picture-taker realize their ideal pictures more easily; put another way, they help the picture-taker lie better.

The Google ad came to mind as I was reading about an effort by a group called the Archival Producers Alliance (APA) to develop a set of guidelines around the use of generative-AI tools in documentary filmmaking. The push is well timed. You might’ve noticed some hubbub around filmmaking and generative AI of late. In the movies, the horror flick Late Night With the Devil drew curious ire for its use of AI to create title cards, which feels like a tricky thing to litigate. After all, movies use computer-generated imagery all the time (have you seen a Marvel film?), though I suppose in this case, a specific friction point lies in how the movie was lauded in part for its aesthetic texture.

The issue is more clearly pronounced in documentary and nonfiction filmmaking; recently, controversy percolated around a Netflix true-crime documentary called What Jennifer Did, after a site called Futurism found that the production team appeared to use AI tools to produce photographs of the subject that never actually existed. Here we get much closer to the heart of the issue: the allure of using this technology to create fake archival material for the purposes of storytelling at the expense of actual reality.

It’s stuff like this that drives the effort by the APA — which was founded by Rachel Antell, Stephanie Jenkins, and Jen Petrucelli — to develop these gen-AI-documentary guidelines. The trio are all archival producers, meaning that they hail from a specific subsection of the documentary tradition that takes the sourcing, licensing, representation, and usage of archival materials very seriously. “It’s really a collaborative and creative art,” said Jenkins, who has also worked at Ken Burns’s Florentine Films since 2010. “What I try to do is encourage people watching docs to think about every single cut that has any archival in it, about how there was someone whose hands were on every piece of that material coming into a documentary.” As they put it, the impetus isn’t to be outright skeptical about generative AI, but simply to develop a resource that helps filmmakers handle the technology in a responsible way. At this writing, the guidelines are still being drafted in collaboration with hundreds of their documentary peers, and it’s my understanding they hope to get a finalized version out by the end of June.

You can read about portions of the draft in Indiewire, but intrigued by the initiative (and how its concerns will probably trickle down to podcasts sooner rather than later), I reached out to Antell and Jenkins to talk through what they’re doing.

How did this push to develop these guidelines come about?

Rachel Antell: Almost exactly a year ago, we started to see filmmaking teams create what we call “fake archivals”: visuals that were meant to read as historical imagery but were actually created using generative AI. We’re archival producers, and this made us realize it was all kind of the Wild West. There wasn’t anything governing, either legally or ethically, how these generative-AI tools, and the imagery they produced, were going to be used within documentary film.

So we gathered a large group of other archival producers to discuss what we were seeing, and we then released an open letter about our concerns. The letter came out in November with signatures from over a hundred people within the field, and it was reprinted in The Hollywood Reporter. After that, we had some conversations with the International Documentary Association (IDA) about the need for guidelines around generative AI and how best to protect the integrity of documentary film, and the IDA invited us to present that at the Getting Real festival in April. That gave us a deadline, and we worked with a committee of about 15–20 archival producers to produce what’s now still a draft document. And I should note, we developed it in discussion with a wide range of experts: AI professionals, AI academics, documentary academics, and so on.

Could you tell me a little more about the “fake archival” you saw?

Antell: In one case, there was a historical figure who was not prominent but whose story was being told, and there weren’t any photos of this person. Cameras had been invented by this time, but it was a period when there weren’t a lot of photographs being taken. So in order to represent the person, the filmmakers chose to use generative AI to create something that looked like how someone from that time and demographic might have looked. It’s not so much the actual use of generative AI here that’s concerning, but whether the audience is aware that what they’re seeing isn’t an actual historical document.

So, I’ve had conversations with people working on generative-AI tools about this kind of stuff, and one argument that routinely comes up goes something like this: “But isn’t it great that we get to see a representation of a historical subject we otherwise wouldn’t be able to visualize?” What are your thoughts on that sentiment?

Stephanie Jenkins: We started out being freaked out by this technology, but we’ve also been talking to filmmakers who are using it in really amazing and creative ways. So it’s important to note that we want to embrace it while also protecting what it is that we do.

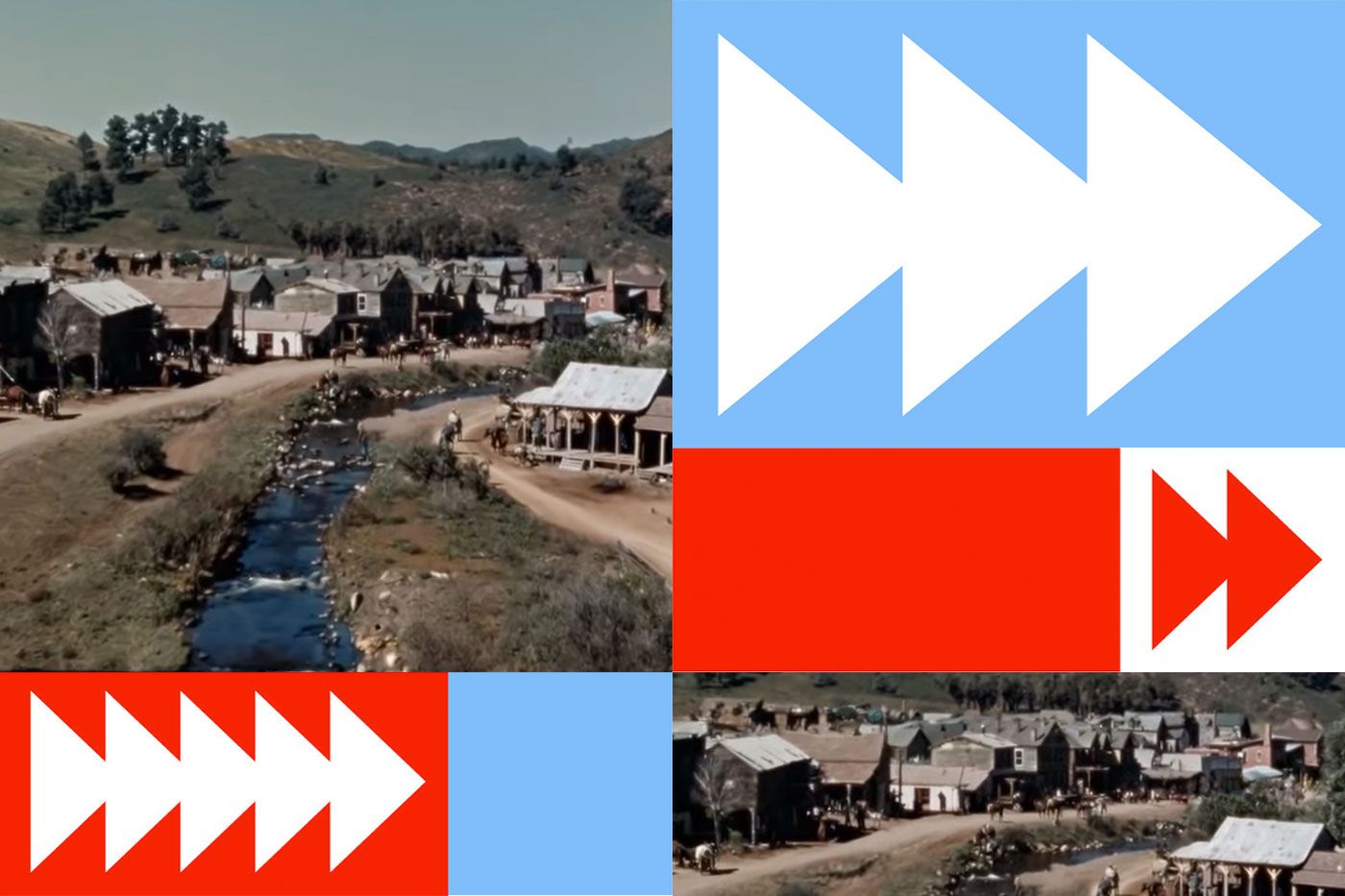

But there are a lot of reasons why that would be difficult. One example I’ve been citing is from the early launch of Sora; there was a video generated from the prompt “historical video of the California Gold Rush.” If you look at it, you see what looks like a 1970s-era color drone shot of a beautiful babbling brook and saloon-style buildings. One might say, “Look, it gets you closer to putting the viewer there,” but it’s actually important to know there were obviously no drone cameras at the time, so if what you’re trying to do is present this footage as a tangible thing, you’re getting as far away from what was available in the period as possible.

There are also a lot of inaccuracies within that footage. This is the algorithmic bias piece: Whatever Sora uses to generate the video is pulling from the internet, so maybe it’s pulling from westerns, from Hollywood. That material doesn’t necessarily reflect the real demographics. It doesn’t reflect the human and environmental pillaging that happened during this time. It doesn’t reflect who was actually there and what their lives would’ve looked like. So it’s really a disservice to viewers to make something up based on these random data points already available to us on the internet.

Antell: One big thing about algorithmic bias is that it’s very hard to identify. When a human-made piece of media contains bias, which it generally will, the authorship and context can be known. So those biases can be framed and wrestled with. In contrast, synthetic media contains bias, but no known author. It carries presumed authority, but it has no accountability.

Jenkins: To be sure, there can be a temptation to use generative AI as a cheap fix to tell a story in a cinematic way. And what we say is, knowing the algorithmic bias, if you’re going to do any reenactment to imagine a space, do it as you would a human reenactment. Do the research. Get a sense of what people would’ve worn and what things would’ve been like. These details are the stuff of really good storytelling that help us relate to the subjects of our projects. It’s a disservice to make a reenactment just using generative AI if what you’re trying to do is tell a story of something that might not have existed otherwise. There are other creative ways to do it.

Speaking of reenactments: I’ve always thought that reenactments are the laziest form of documentary storytelling, but this makes me wonder whether the problem raised by generative-AI use is specific to reenactments as a narrative tool. Am I thinking too narrowly here, or does generative AI pose more fundamental risks to the language of documentary filmmaking writ large?

Antell: I think it’s too early to know. But we’ve been seeing applications creep into other aspects of historical documentaries as well.

Jenkins: And into true crime.

Antell: The two big problems that the documentary industry has always faced are time and money. Every doc producer is really squeezed. So I get that generative AI is a quick answer to both those things, but the question is this: at what cost? This is very much at the heart of our thinking.

Some of what we talk about in our guidelines is applicable to all kinds of media, but a lot of it really is focused on documentary. There are a lot of options out there. You can watch a docudrama. You can watch historical fiction. There’s nothing wrong with that. Those are great forms of media. But when you sit down to watch a documentary, you’re making certain assumptions about what you’re seeing, one of which is that what you’re seeing is true and real. And people don’t like to be deceived. When they are, trust erodes quickly and is difficult to build back. There’s an inherent value in preserving the fact-based nature of documentary, so that it can continue to be a trusted cultural resource.

Jenkins: Again, we’re not trying to say people who use generative AI are bad. I’d like to shout out two films that helped shape our thinking on this: Another Body and Welcome to Chechnya. Those are two amazing uses of generative AI to protect the identities of the participants in those films. There’s an opportunity here for filmmakers who want to keep doing deep research and authentic, primary-source-based storytelling: it’s possible those films will continue to be “premium products.” (Which, by the way, are two words I haven’t ever wanted to use to describe documentaries.) But I think it speaks to the fact that we’re in a changing media- and documentary-funding landscape. We’re trying to get in at this early stage so we can make the case to executives and people funding documentaries that they should continue to fund the archival piece.

Tell me more about the current state of doc funding. My colleague Reeves Wiedeman had a feature on this about a year ago, but I’m curious about what you’ve seen since then.

Antell: We’re definitely seeing a contraction. There’s less work being produced right now.

Jenkins: I’ve been going to independent documentary festivals (the Camden Film Festival, the Big Sky Film Festival, etc.), and there’s a big glut of films being made right now that aren’t being purchased. And the films that are being bought are being offered less money, often not enough to actually meet the budget of the film.

Because this newsletter usually tackles podcasts, I want to ask this: Have you been seeing similar generative-AI issues hit the audio field?

Jenkins: Honestly, not much yet, though we’ve been following the George Carlin story closely. It’s just a really complicated First Amendment and fair-use issue, and I thought it was interesting to see in that case that they settled out of court.

Antell: For both audio and visual materials, we do call out that if you are creating something we would call a deepfake, something that represents an actual human being in history, whether their voice or their likeness, you’d want to go the extra step beyond what’s legally required and get consent from either that person or their descendants. We do think the stakes are a little higher here, and consent is an important part of the documentary-filmmaking process.

There’s a lot that’s unknown right now. We’ve tried to keep the guidelines broad in a lot of ways because the legal and technical landscapes are evolving very rapidly, and we want to make sure that they can remain relevant while people are starting to navigate this in real time.

Jenkins: We’re hoping that this can be used as a continuation of documentary ethics and not a completely new thing. As a discipline, we’ve had to transform around different types of technology and different ethical concerns for the last hundred-plus years. There was a time when it was really controversial to use Photoshop to retouch a photograph, you know? It’s kind of expected now that if you want a photograph to look a certain way in your film, you might retouch it as long as it doesn’t fundamentally change the substance of it, but that’s a sliding scale. We hope to make sure that people are keeping their subjects, teams, and audiences in mind when they’re making these creative choices.

How are you thinking about the prospect of enforcing norms of accuracy and transparency around this stuff?

Jenkins: It’s important to point out that the guidelines we’ll publish are an ethical document, not a legal one. Streamers will have to rely on their legal departments to make some of these decisions.

In our guidelines, we talk about keeping track of what prompts and gen-AI tools you use. We encourage filmmakers to credit any use of generative AI (audio, images, anything) and keep track of it throughout the process. This is a practical thing for insurance reasons, which is a bottom-line concern for everyone. If you use gen AI, you need to make sure that you’ll still be insured by your errors and omissions policy. There’s a world in which a record of any gen-AI material used becomes one of a film’s deliverables, in the same way a music-cue sheet is a deliverable, but right now filmmakers are really confused about what they’re even supposed to be doing and how to keep track of these things through a documentary-making process that often takes years to complete.

It’s also going to be really interesting to see how the public responds. I don’t want to comment directly on What Jennifer Did because I haven’t seen it yet, but the outcry there does suggest this is just the beginning of what I think will be a widespread erosion of audience trust. At the APA, we’re kind of in the anti-misinformation and pro-democracy business, and even though we’re talking about perhaps a niche piece of the documentary-production world, it really does speak to larger issues in public conversations about truth.

Antell: I don’t think of it in terms of enforcement because it doesn’t feel like this is an adversarial thing. We’ve found that the documentary community is really hungry for guidelines. There’s excitement around using generative AI. There’s definitely a place for it, and I think it can be additive to the documentary field if it’s used in a way that doesn’t compromise general documentary integrity.

Jenkins: I love archival documentary because it works at a human pace and a human scale. There is something so fundamental about the human voice and the photographs we take of loved ones, and of news that’s shot in real time. These are things we should continue to treasure and hold up rather than pretend that you don’t need them in order to get a story across. They are the stuff of stories, and when you unearth a new piece of archival material and put it in a documentary, that gets passed along into the historical record.

The same thing happens with synthetic media, whether or not it depicts anything real. It gets passed on and can be used in other people’s films, on YouTube, in educational resources. In my work, I’ve been able to unearth a lot of amazing footage and photographs that are now available to a lot of other people to use and consider, and that’s an important part of what we do as archival producers. I just really don’t want that to be lost or misunderstood because of this new technology.

Nicholas Quah, 2024-05-07 18:54:16